Digital Services

Industry-Specific Solutions

- Medical & Dental Marketing

- Home & Trade Services

- Enterprise & Corporate Solutions

- Education & Non-Profit Marketing

- E-commerce & Retail Marketing

- Law Firm Marketing

- Real Estate Marketing

- Landscaping & Lawn Care

- Home Remodel & Construction Marketing

- Event Marketing

- Roofing Marketing

- Electrician Marketing

- Tinting & Detailing Marketing

Business Growth Strategies

Website Design & UX/UI

- Ultra Consultants Website Optimizations

- Rodem UI/UX Case Study

- The Hairport Hair Salon Web Designer

- Metro Nova Website Revamp

- Whiting Indiana City Website

- Identity Aesthetics

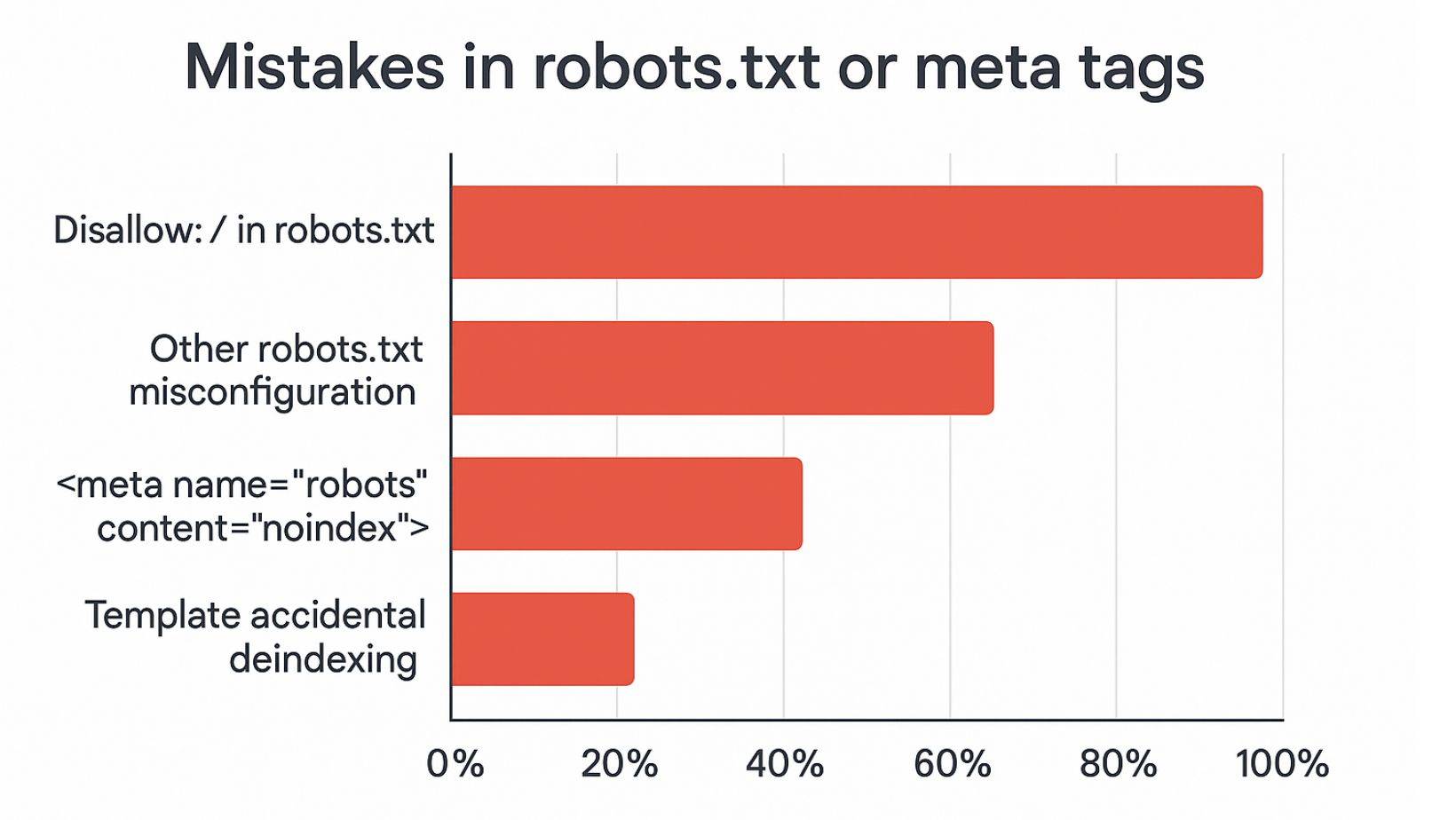

SEO & Local SEO

Google Ads / Paid Media

E-Commerce

Lead Generation & Digital Marketing

Before & After / Transformation

Technology

Leverage our Nation Media Dashboard FX to track KPIs and turn your marketing data into clear action.

Methodology

Explore our proven methodology that aligns strategy, execution, and reporting to drive measurable growth.

Who We Are

Meet the Nation Media Design team behind the strategies, technology, and results for growing brands.

Customer Reviews

See real client reviews and success stories from businesses that trusted us to scale their marketing.

🇺🇸 An American Company